Remember the players who had hundreds of teenagers playing Axie Infinity for them to earn Smooth Love Potions (SLP)?

Yeah, that was 4 years ago.

Now you could just train and spin up 100 Autonomous Codec Operators to play for you instead

...and monetize it and sell it to others

✅ AI x Robotics

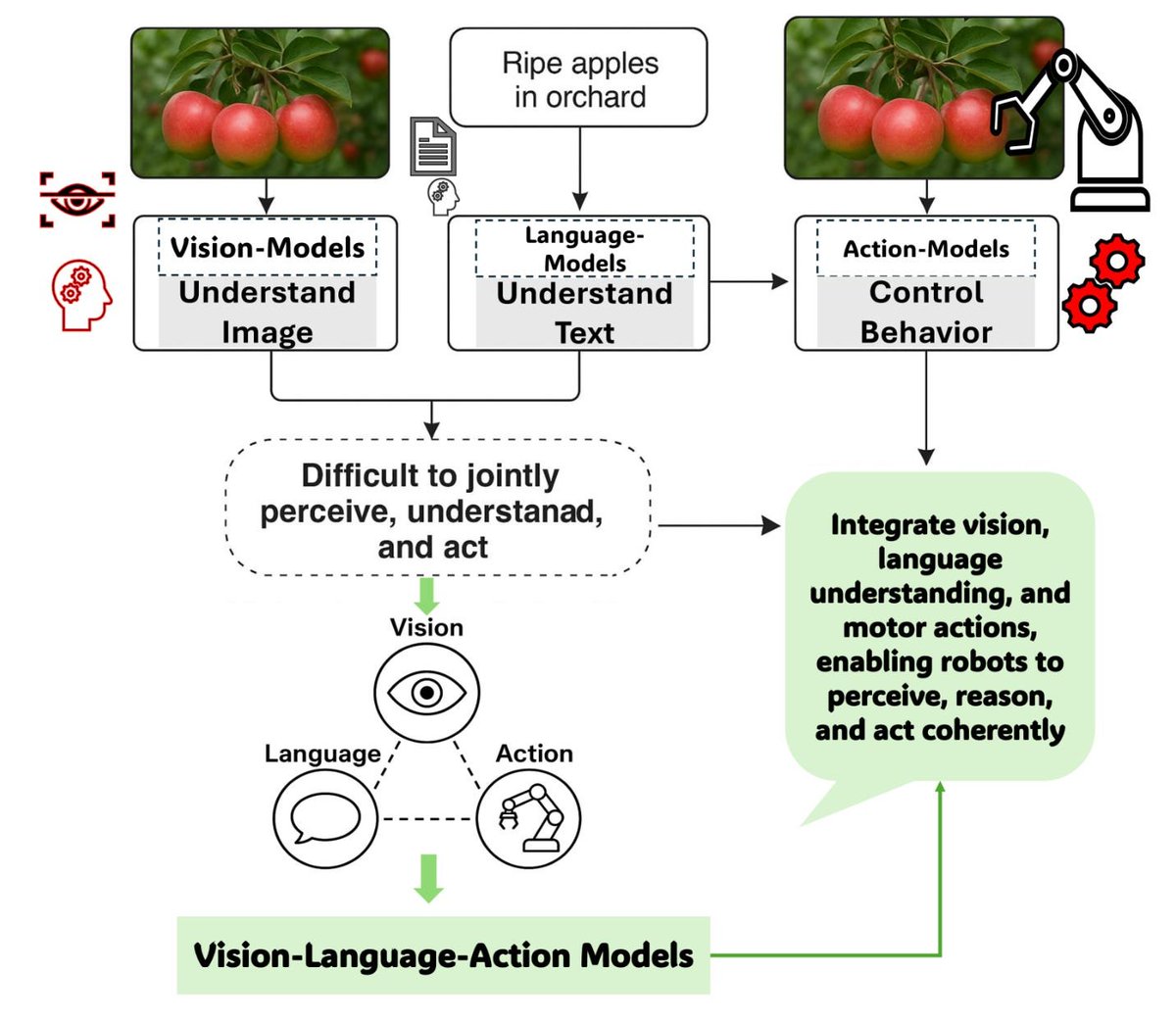

The AI x Robotics narrative is heating up for real with VLA (Vision-Language-Action) models

At this stage of the AI ecosystem, most protocols and agents use text-based LLM engines or static screenshots to interpret data

But remember that most of the real world has no API access: you need vision, decisions and actions. The real world has to be seen in pixels, and this is where VLA models come in

@Codecopenflow enables vision-based automation of software and robotics, using a tech stack built from scratch

✅ CODEC Operators

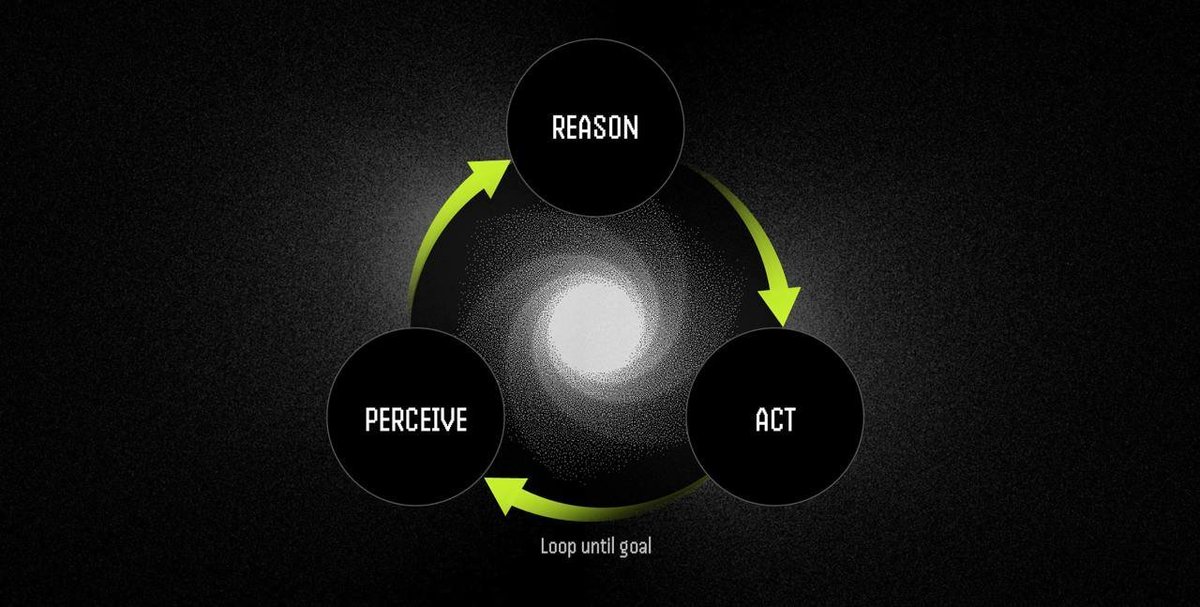

Operators are autonomous software agents that perform tasks through a perceive-reason-act cycle. Because they can see the screen (or camera feeds and sensor data), they can make decisions that text-only LLMs cannot

• Perception: Captures screenshots, camera feeds, or sensor data

• Reasoning: Processes observations and instructions using vision-language models

• Action: Executes decisions through UI interactions or hardware control

These three steps repeat in a continuous loop
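The perceive-reason-act loop can be sketched roughly like this. It is a minimal illustration, not Codec's SDK: `Observation`, `Action`, `run_operator` and the toy counter "screen" are hypothetical names. In a real Operator, `perceive` would grab a screenshot or camera frame, `reason` would call a model, and `act` would send UI or motor commands.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """What the Operator perceived this tick (a text stand-in for pixels here)."""
    frame: str


@dataclass
class Action:
    kind: str          # e.g. "click", "type", or "done" to stop
    payload: str = ""


def run_operator(
    perceive: Callable[[], Observation],
    reason: Callable[[Observation, str], Action],
    act: Callable[[Action], None],
    instruction: str,
    max_steps: int = 10,
) -> List[Action]:
    """Run a bounded perceive-reason-act loop and return the actions executed."""
    history: List[Action] = []
    for _ in range(max_steps):
        obs = perceive()                   # Perception: capture screen / camera / sensors
        action = reason(obs, instruction)  # Reasoning: model maps observation + task to a decision
        if action.kind == "done":          # the reasoner decides the task is complete
            break
        act(action)                        # Action: UI event or hardware command
        history.append(action)
    return history


# Toy demo (hypothetical): the "screen" shows a counter; the task is to click until it reads 3.
screen = {"count": 0}


def perceive() -> Observation:
    return Observation(frame=f"counter={screen['count']}")


def reason(obs: Observation, instruction: str) -> Action:
    current = int(obs.frame.split("=")[1])
    return Action("done") if current >= 3 else Action("click", "increment")


def act(action: Action) -> None:
    if action.kind == "click":
        screen["count"] += 1


taken = run_operator(perceive, reason, act, "click until the counter reads 3")
print(len(taken), screen["count"])  # 3 3
```

The `max_steps` bound is a design choice for the sketch: an agent acting on live systems should never loop forever if the model fails to declare the task done.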

Operators can run on bare-metal servers, virtual machines (on any operating system), or even on robots.

Each Operator automatically gets a dedicated compute machine (an isolated VM or container instance) and can be secured by TEEs (Trusted Execution Environments, which provide hardware-level isolation) for sensitive code and data.

✅ AI Intelligence Layer

Operators can be configured to combine one or more models (LLM or VLA) as their "brain"

For instance, pairing the low-cost Mixtral 8x7B language model with the open-source CogVLM vision model lets Operators read on-screen text and interpret live screen or camera feeds, all at a fraction of GPT-4's cost.
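A rough sketch of such a two-model "brain": a vision model turns the frame into text, then a language model picks the next step. The model names come from the thread, but `CompositeBrain`, the prompt wording and the stub functions are hypothetical, and no real inference API is called; you would swap the stubs for whatever client actually serves CogVLM and Mixtral.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signatures: in a real setup these would wrap calls to a hosted
# vision model (e.g. CogVLM) and a language model (e.g. Mixtral 8x7B).
VisionModel = Callable[[bytes, str], str]  # (image bytes, question) -> text answer
LanguageModel = Callable[[str], str]       # prompt -> completion


@dataclass
class CompositeBrain:
    vision: VisionModel
    language: LanguageModel

    def decide(self, frame: bytes, instruction: str) -> str:
        # Step 1: the vision model turns pixels into a text description.
        description = self.vision(frame, "Describe the visible UI elements and on-screen text.")
        # Step 2: the language model picks the next action from that description.
        prompt = (
            f"Task: {instruction}\n"
            f"Screen: {description}\n"
            "Reply with exactly one action, e.g. CLICK <label> or TYPE <text>."
        )
        return self.language(prompt).strip()


# Stub models so the sketch runs offline.
def fake_vision(image: bytes, question: str) -> str:
    return "A dialog with buttons labelled 'Save' and 'Cancel'."


def fake_llm(prompt: str) -> str:
    return "CLICK Save\n" if "'Save'" in prompt else "WAIT"


brain = CompositeBrain(vision=fake_vision, language=fake_llm)
print(brain.decide(b"<png bytes>", "save the document"))  # CLICK Save
```

Splitting perception and reasoning like this is what keeps costs down: the cheaper text model only ever sees a short description, not the raw image.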

A VLA (Vision-Language-Action) model lets the agent interpret visual input together with a language instruction, and then output an action based on what it sees
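One common way a single VLA model "outputs an action" is the approach used by Google DeepMind's RT-2. Each continuous action dimension is discretized into 256 bins, so actions become tokens the model can generate like text. The thread doesn't say whether Codec's Operators use this scheme. The sketch below only illustrates the encoding, and the [-1, 1] action range is an assumption.

```python
from typing import List, Sequence

N_BINS = 256  # RT-2 uses 256 bins per action dimension; other VLA models may differ


def action_to_tokens(action: Sequence[float], low: float = -1.0, high: float = 1.0) -> List[int]:
    """Map each continuous action dimension to an integer bin id in [0, N_BINS - 1]."""
    tokens = []
    for a in action:
        a = min(max(a, low), high)          # clip into the assumed valid range
        frac = (a - low) / (high - low)     # normalise to 0.0 .. 1.0
        tokens.append(min(int(frac * N_BINS), N_BINS - 1))  # top edge goes in the last bin
    return tokens


def tokens_to_action(tokens: Sequence[int], low: float = -1.0, high: float = 1.0) -> List[float]:
    """Approximate inverse: return the centre of each bin."""
    width = (high - low) / N_BINS
    return [low + (t + 0.5) * width for t in tokens]


tokens = action_to_tokens([-1.0, 0.0, 0.3, 1.0])
print(tokens)  # [0, 128, 166, 255]
```

Decoding returns bin centres, so the round trip is off by at most half a bin width (1/256 for this range). That is the precision you trade for letting a language-model backbone emit actions directly.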

✅ Use Cases

🔹 Desktop Automation

Operators can automate repetitive office tasks by controlling GUIs, such as filling in spreadsheets, updating calendars, or other tasks that require GUI interaction

Because an Operator actually sees what it's doing, it can cope with UI updates (e.g. a button moving) that would break hard-coded scripts
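Why seeing the screen helps with UI changes: instead of clicking fixed coordinates, the agent can look for the element by its visible label. The sketch below assumes a vision/OCR pass has already produced text boxes. `TextBox` and `find_click_point` are hypothetical names, not part of Codec's stack.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TextBox:
    """One piece of text found on screen by an OCR / vision pass (pixel coordinates)."""
    text: str
    x: int
    y: int
    w: int
    h: int


def find_click_point(boxes: List[TextBox], label: str) -> Optional[Tuple[int, int]]:
    """Return the centre of the first box whose text matches `label`, ignoring case and padding."""
    wanted = label.strip().lower()
    for box in boxes:
        if box.text.strip().lower() == wanted:
            return (box.x + box.w // 2, box.y + box.h // 2)
    return None  # label not visible: the agent should re-perceive or report failure


# The same instruction still works after a UI redesign moves the button.
old_layout = [TextBox("File", 0, 0, 40, 20), TextBox("Save", 10, 40, 80, 30)]
new_layout = [TextBox("Settings", 0, 0, 60, 20), TextBox(" SAVE ", 300, 200, 80, 30)]
print(find_click_point(old_layout, "Save"))  # (50, 55)
print(find_click_point(new_layout, "Save"))  # (340, 215)
```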

🔹 Gaming Agents

Operators can control players or test video games. The agents stream the screen and take actions based on what they see by sending keyboard or mouse commands to the game.

This can be used for QA testing, advanced NPC opponents, or automating web3 games
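Here is a toy version of the last step in a gaming agent, turning a decision into keyboard or mouse input. The game, key bindings and rule-based policy are all hypothetical. The policy stands in for the model's decision, and actually injecting the events into a game window would need an OS-level input library, which is not shown.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class InputEvent:
    device: str      # "keyboard" or "mouse"
    code: str        # key name or mouse button
    hold_ms: int = 50


# Hypothetical key bindings for an imaginary game.
BINDINGS = {
    "move_left": InputEvent("keyboard", "a", 100),
    "move_right": InputEvent("keyboard", "d", 100),
    "attack": InputEvent("mouse", "left"),
}


def choose_intent(player_x: int, enemy_x: int, attack_range: int = 5) -> str:
    """Tiny rule-based policy standing in for the model's decision from the screen."""
    if abs(enemy_x - player_x) <= attack_range:
        return "attack"
    return "move_right" if enemy_x > player_x else "move_left"


def to_inputs(intents: List[str]) -> List[InputEvent]:
    """Translate high-level intents into concrete input events."""
    return [BINDINGS[i] for i in intents]


print(choose_intent(0, 20), choose_intent(18, 20), choose_intent(30, 20))
# move_right attack move_left
```

Keeping intents separate from raw key codes makes the same policy reusable across games or control schemes, which also suits QA use, where you only swap the bindings table.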

🔹 Robotics

Operators can control physical robots. The machine layer connects to a robot's hardware (sensors and actuators), and the agent can send commands to move an arm or navigate.

For example, it could capture a camera feed of objects moving on a conveyor belt and act based on their movement. If there is an obstacle in the way, the Operator can see it and steer the robot around it
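A deliberately simple reactive rule shows the "see obstacle, avoid it" idea. It assumes a perception step has already turned the camera frame into obstacle detections (bearing and distance). `Detection`, `steer` and the thresholds are hypothetical, and real robots would use a proper motion planner rather than this one-frame rule.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """An obstacle detected in one camera frame, relative to the robot."""
    bearing_deg: float  # 0 = straight ahead, negative = left, positive = right
    distance_m: float


def steer(detections: List[Detection], safe_distance_m: float = 0.5,
          cone_deg: float = 30.0, cruise_speed: float = 0.3) -> Tuple[float, str]:
    """Return (forward speed in m/s, turn direction) for the current frame."""
    blocking = [d for d in detections
                if abs(d.bearing_deg) <= cone_deg and d.distance_m < safe_distance_m]
    if not blocking:
        return cruise_speed, "straight"
    nearest = min(blocking, key=lambda d: d.distance_m)
    # Stop and turn away from the side the nearest blocking obstacle is on.
    return 0.0, ("right" if nearest.bearing_deg <= 0 else "left")


print(steer([]))                        # (0.3, 'straight')
print(steer([Detection(-10.0, 0.3)]))   # (0.0, 'right')
```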

✅ Data Collection and Onchain safety rails

By recording Operator activity onchain on Solana, Codec can offer immutable logs of every action performed
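The core idea behind an immutable action log is a hash chain. Each entry commits to the previous one, so editing any past action breaks every hash after it. The sketch runs locally with Python's `hashlib` and does not talk to Solana. Posting only the latest hash in a transaction would make the whole history tamper-evident, but the thread doesn't describe how Codec actually structures its onchain logs.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, action: Dict[str, Any]) -> str:
    """Hash the previous hash together with a canonical JSON encoding of the action."""
    payload = json.dumps({"prev": prev_hash, "action": action}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: List[Dict[str, Any]], action: Dict[str, Any]) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"action": action, "prev": prev, "hash": entry_hash(prev, action)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute the chain; any edited or reordered entry makes this return False."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["action"]):
            return False
        prev = entry["hash"]
    return True


log: List[Dict[str, Any]] = []
append(log, {"op": "click", "target": "Save"})
append(log, {"op": "type", "text": "hello"})
ok_before = verify(log)

log[0]["action"]["target"] = "Delete"  # tamper with history
ok_after = verify(log)
print(ok_before, ok_after)  # True False
```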

In the future, robotics companies might be required to stake a token as a guarantee that their Operators will never make a robot contact a human above a certain force. If they breach that limit, part of their stake would be slashed (similar to EigenLayer / Symbiotic restaking)
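The staking-and-slashing idea reduces to simple bookkeeping. Everything here is hypothetical: the 10% slash rate, the force limit and `OperatorBond` itself. The sketch also skips the hard part, which is getting a trustworthy, attested force measurement onchain.

```python
from dataclasses import dataclass


@dataclass
class OperatorBond:
    staked: int             # tokens at stake, in smallest units (hypothetical)
    max_force_n: float      # agreed contact-force limit in newtons
    slash_bps: int = 1_000  # share slashed per breach in basis points (10%, an assumption)

    def report_contact(self, measured_force_n: float) -> int:
        """Slash the bond if a measured contact force exceeds the limit; return the amount slashed."""
        if measured_force_n <= self.max_force_n:
            return 0
        penalty = self.staked * self.slash_bps // 10_000  # integer math avoids float rounding
        self.staked -= penalty
        return penalty


bond = OperatorBond(staked=1_000_000, max_force_n=50.0)
print(bond.report_contact(20.0))  # 0 (within the limit)
print(bond.report_contact(80.0))  # 100000
print(bond.staked)                # 900000
```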

✅ Training environment for Robotics

With Codec, untrained virtual models can be deployed into a dynamic, high-fidelity training ground with no physical robot required.

Simulate, train, and refine complex behaviors at cloud-scale, then transfer those policies to real hardware with confidence.

Training environments can be spun up quickly for all types of Operators (Software, Gaming or Robotics)
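As a toy illustration of "train in simulation, then take the policy to hardware", the code below runs tabular Q-learning in a five-cell corridor simulator. It is not Codec's training method, which the thread doesn't describe. Real robotics training uses physics simulators and deep RL or imitation learning, but the loop has the same shape: step the simulator, update the policy, repeat.

```python
import random
from typing import Dict, List, Tuple

N_STATES = 5                 # positions 0..4; reaching 4 ends the episode with reward 1
ACTIONS = ("left", "right")


def step(state: int, action: str) -> Tuple[int, float, bool]:
    """Toy simulator: move one cell (clamped at 0); the goal is the last cell."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, max_steps: int = 100, seed: int = 0) -> Dict[Tuple[int, str], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:                      # explore
                a = rng.choice(ACTIONS)
            else:                                           # exploit, breaking ties randomly
                best = max(q[(s, x)] for x in ACTIONS)
                a = rng.choice([x for x in ACTIONS if q[(s, x)] == best])
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[(s2, x)] for x in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])       # Q-learning update
            s = s2
            if done:
                break
    return q


def greedy_policy(q: Dict[Tuple[int, str], float]) -> List[str]:
    """The 'exported' policy: best action in every non-goal state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


q = train()
print(greedy_policy(q))  # ['right', 'right', 'right', 'right']
```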

✅ Codec SDK

A full SDK and API have been built so devs can deploy their Operators easily

✅ Operator Marketplace

In the future, Operators can be sold on a custom marketplace.

There will be a revenue split so that you can ship and monetize your VLA Operator, meaning that if you train effective Operators, you can open up additional revenue streams
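The thread doesn't give the actual split, so the percentages below are made up. Still, a marketplace payout usually comes down to basis-point arithmetic on integer token amounts, with any rounding dust assigned deterministically. `split_revenue` is a hypothetical helper.

```python
from typing import Dict


def split_revenue(amount: int, shares_bps: Dict[str, int]) -> Dict[str, int]:
    """Split an integer amount by basis-point shares; rounding dust goes to the first party."""
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must add up to 10,000 bps (100%)")
    payouts = {party: amount * bps // 10_000 for party, bps in shares_bps.items()}
    first = next(iter(payouts))
    payouts[first] += amount - sum(payouts.values())  # nothing is lost to rounding
    return payouts


# Hypothetical split: 70% to the Operator's creator, 20% platform, 10% compute.
print(split_revenue(999, {"creator": 7_000, "platform": 2_000, "compute": 1_000}))
# {'creator': 701, 'platform': 199, 'compute': 99}
```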

✅ Concluding thoughts

I think we will see massive developments in the VLA field over the next year. We have seen how fast LLMs have developed: it was only a few years ago that GPT-1 launched. Robotics and vision models will very likely become a hot narrative at some point this cycle, and I like to be positioned early

Oh, and did I mention that the co-founders are from Hugging Face and Elixir Games 👀

Note: Slappjakke has large $CODEC bags, and this is one of those times I got even more bullish while writing this thread and added even more

As always, this is not financial advice, and this is a high-risk investment, so do your own research.
